Pipelines

A pipeline is a Java or Scala application that reads from one or more input sources, processes the data, and writes the results to a single endpoint. In HERE Workspace, you can also enrich your data with our map and traffic data sets.

In this topic

- Why use Pipelines

- Pipeline Components

- Pipeline Features

- Pipeline Development Workflow

- Pipeline Creation

- Operational Requirement

- Catalog Compatibility

- Pipeline Deployment

- For Developers

Why use Pipelines

Two of our most common use cases are:

- Map compilation - You combine multiple batch data sources into a single customized map.

- Crowd-sourced map updates - You maintain continuously updated streaming data that allows you to issue traffic advisories.

Here are some other scenarios where pipelines might be a good fit for you:

- Read vehicle sensor data from an automotive fleet, enhancing a high-definition map in support of autonomous driving.

- Aggregate and apply analytics to eCommerce data, to better understand merchandise and sales trends.

- Supplement your Business Intelligence tools, or apply Machine Learning to production systems to dynamically deliver the best products or offers to your customers.

- Aggregate data with your proprietary algorithm for optimal ad bidding.

- Read vehicle data to understand road conditions and share safety alerts with the driver.

Pipeline Components

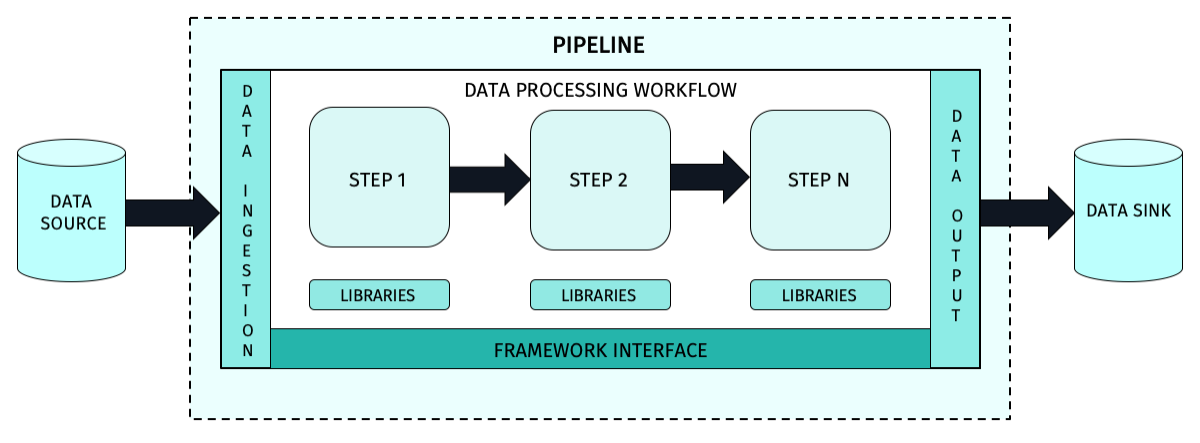

The pipeline application is compiled into a JAR file for deployment in HERE Workspace. A pipeline can run on a stream processing framework (Apache Flink) or batch processing framework (Apache Spark). The pipeline application has two basic components:

- The framework interface: This is an artifact of the data being processed and the selected processing framework. The data ingestion and data output are also artifacts. They are basic components required for pipeline execution. The basics of a pipeline development project are predefined based on Maven archetypes supplied in the HERE Data SDK for Java and Scala.

- The data processing workflow: This consists of the hard-coded data transformation algorithms that make each pipeline unique, especially when using HERE libraries and resources. The specialized algorithms in the pipeline are required to transform the data input into a useful form in the data output.

The workflow executes a unique set of business rules and algorithmic transformations on the data and supplies its results as output to the data sink for temporary storage. Run-time considerations are typically handled through a set of configuration parameters that are applied to the pipeline when it is deployed and a job is initiated.

Note

The application must be configured for either a stream or a batch processing environment, never both. All pipelines must also have at least the following components, which are external to the pipeline itself:

- One data source (catalog), although many input data sources are supported

- One data sink (catalog)
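The constraints in this note can be expressed as a small validation check. The following is a minimal sketch, not part of the HERE Data SDK: the `PipelineSpec` class, the `Environment` enum, and the catalog names are hypothetical and exist only to illustrate the rules (exactly one processing environment, at least one input catalog, one output catalog).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical model of a pipeline's external components; not a HERE SDK type. */
final class PipelineSpec {
    /** A pipeline targets exactly one processing environment. */
    enum Environment { STREAM, BATCH }

    final Environment environment;
    final List<String> inputCatalogs;
    final String outputCatalog;

    PipelineSpec(Environment environment, List<String> inputCatalogs, String outputCatalog) {
        if (environment == null) {
            throw new IllegalArgumentException("choose either STREAM or BATCH");
        }
        if (inputCatalogs == null || inputCatalogs.isEmpty()) {
            throw new IllegalArgumentException("at least one input catalog is required");
        }
        if (outputCatalog == null || outputCatalog.isEmpty()) {
            throw new IllegalArgumentException("one output catalog is required");
        }
        this.environment = environment;
        // Defensive copy so the spec cannot change after validation.
        this.inputCatalogs = Collections.unmodifiableList(new ArrayList<>(inputCatalogs));
        this.outputCatalog = outputCatalog;
    }

    /** Returns the framework the text associates with each environment. */
    String framework() {
        return environment == Environment.STREAM ? "Apache Flink" : "Apache Spark";
    }

    public static void main(String[] args) {
        // Catalog names below are placeholders.
        PipelineSpec spec = new PipelineSpec(
                Environment.BATCH, Arrays.asList("catalog-a", "catalog-b"), "catalog-out");
        System.out.println(spec.framework() + " pipeline with "
                + spec.inputCatalogs.size() + " input catalogs");
    }
}
```

Because the environment is a single enum value, a spec cannot describe a pipeline that is both stream and batch at once.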

Pipeline Features

- Pipelines can contain any combination of data processing algorithms in a reusable JAR file package.

- Pipelines can be built using Scala or Java, based on a standard pipeline application template.

- Pipelines are compiled and distributed as fat JAR files for ease of management and deployment.

- Pipelines can be highly specialized or very flexible, based on how the data processing workflow is designed.

- Pipelines are deployed with a set of run-time parameters that allow as much pipeline flexibility as needed.

- The pipeline service uses standard framework interfaces for processing streaming data (Apache Flink) and for batched data (Apache Spark).

- Pipelines can be chained by using the output catalog of one pipeline as the input catalog of another pipeline. (At this time, there are no tools included in HERE Workspace specifically to implement such a scheme.)

- Pipelines can be deployed and managed (1) from the command line interface (the OLP CLI), (2) through the portal, or (3) by a custom user application using the pipeline service REST API.

Pipeline Development Workflow

Pipelines go through a design and implementation process before they can be used. After the pipeline is designed, it is implemented as an executable JAR file. These JAR files can be used by HERE Workspace as needed. Each pipeline JAR file must accommodate the design requirements and restrictions of either a stream or a batch execution environment.

- If a stream environment is selected, the JAR file must be executable on the Apache Flink framework embedded in the pipeline.

- If a batch environment is selected, the JAR file must be executable on the Apache Spark framework embedded in the pipeline.

Flink and Spark each have specific requirements for their JAR file designs.

Pipeline Creation

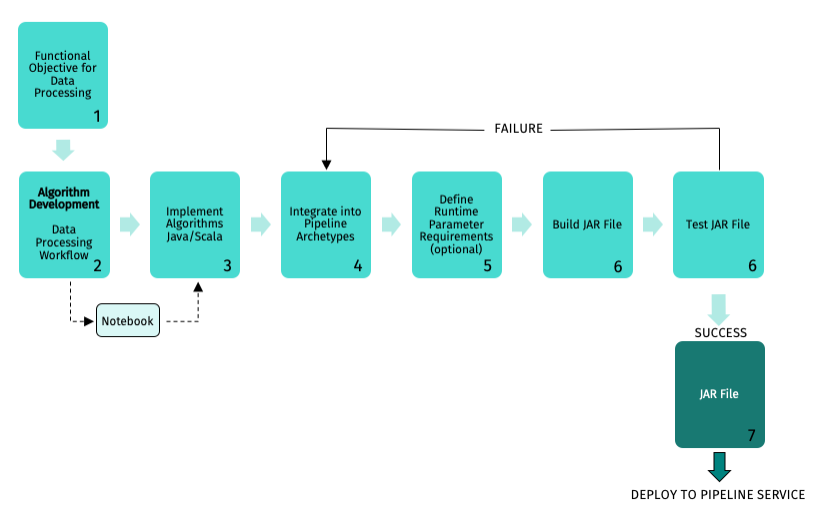

A new pipeline is typically created using the following process:

- Create a functional objective or business goal for the pipeline, typically defining a basic data workflow.

- Develop a set of algorithms for manipulating the data to achieve the objective, in either Java or Scala to be compatible with the pipeline templates.

- Integrate the algorithms into a pipeline application targeting a streaming or batch processing model. Maven archetypes are used to build the pipeline.

- Define any run-time parameters required by the algorithms during the integration process.

- Create and test a fat JAR file. This fat JAR file, with any associated libraries or other assets, is the pipeline deliverable that is deployed onto the pipeline.
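As a rough illustration of the algorithm and run-time parameter steps above, the sketch below keeps the transformation logic in a plain Java class that reads its settings from a `Properties` object. Everything in it is an assumption made for illustration: the `SpeedFilterWorkflow` class, the `workflow.minSpeedKph` key, and the speed data do not come from the HERE Data SDK, and a real pipeline would wire such logic into the Flink or Spark entry point generated by the Maven archetype.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

/** Hypothetical workflow: keeps speed readings at or above a run-time threshold. */
final class SpeedFilterWorkflow {
    private final double minSpeedKph;

    /** Reads the threshold from run-time parameters; the key name is illustrative. */
    SpeedFilterWorkflow(Properties runtimeParams) {
        this.minSpeedKph =
                Double.parseDouble(runtimeParams.getProperty("workflow.minSpeedKph", "0"));
    }

    /** Pure transformation from input records to output records, independent of the framework. */
    List<Double> process(List<Double> speedsKph) {
        List<Double> kept = new ArrayList<>();
        for (double speed : speedsKph) {
            if (speed >= minSpeedKph) {
                kept.add(speed);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        Properties params = new Properties();
        params.setProperty("workflow.minSpeedKph", "50");
        SpeedFilterWorkflow workflow = new SpeedFilterWorkflow(params);
        System.out.println(workflow.process(Arrays.asList(30.0, 50.0, 80.0)));
    }
}
```

Keeping the algorithm free of framework types makes it testable before it is packaged into the fat JAR, and the same class can be reused with different parameter values at deployment.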

Operational Requirement

An operational requirement describes the individual pipelines and catalogs to be used and the execution sequencing. This is the unique topology that must be deployed and can include as many individual pipeline stages as the computing environment can support. Pipelines can be designed for either single or multiple deployments.
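One way to reason about execution sequencing is to treat each pipeline as a stage that reads input catalogs and writes one output catalog, then order the stages so that producers run before consumers. The sketch below does this with Kahn's topological sort. It is an illustration only: the `PipelineTopology` and `Stage` types and the catalog names are hypothetical, and HERE Workspace does not necessarily sequence pipelines this way.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper that derives an execution order for chained pipelines. */
final class PipelineTopology {
    /** One stage: reads the listed input catalogs and writes one output catalog. */
    static final class Stage {
        final String name;
        final List<String> inputs;
        final String output;

        Stage(String name, List<String> inputs, String output) {
            this.name = name;
            this.inputs = inputs;
            this.output = output;
        }
    }

    /** Orders stages so each runs after every stage that produces one of its inputs. */
    static List<String> executionOrder(List<Stage> stages) {
        Map<String, String> producerOf = new HashMap<>(); // catalog -> producing stage
        for (Stage s : stages) {
            producerOf.put(s.output, s.name);
        }
        Map<String, Integer> pending = new LinkedHashMap<>(); // stage -> unmet upstream count
        Map<String, List<String>> downstream = new HashMap<>();
        for (Stage s : stages) {
            int count = 0;
            for (String in : s.inputs) {
                String producer = producerOf.get(in);
                if (producer != null) { // input comes from another stage, not an external source
                    downstream.computeIfAbsent(producer, k -> new ArrayList<String>()).add(s.name);
                    count++;
                }
            }
            pending.put(s.name, count);
        }
        Deque<String> ready = new ArrayDeque<>();
        for (Map.Entry<String, Integer> e : pending.entrySet()) {
            if (e.getValue() == 0) {
                ready.add(e.getKey());
            }
        }
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String stage = ready.poll();
            order.add(stage);
            for (String next : downstream.getOrDefault(stage, Collections.<String>emptyList())) {
                if (pending.merge(next, -1, Integer::sum) == 0) {
                    ready.add(next);
                }
            }
        }
        if (order.size() != stages.size()) {
            throw new IllegalStateException("pipelines form a cycle and cannot be sequenced");
        }
        return order;
    }

    public static void main(String[] args) {
        List<Stage> stages = Arrays.asList(
                new Stage("publish", Arrays.asList("enriched"), "final"),
                new Stage("enrich", Arrays.asList("raw"), "enriched"));
        System.out.println(executionOrder(stages));
    }
}
```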

Catalog Compatibility

For every pipeline, there is a data source and an output catalog to contain the data processed by the pipeline. That output catalog must be compatible with the data transformations done in the pipeline. The following shows a range of possible variations in input and output catalogs:

Pipeline Deployment

During deployment, the data sources and data destinations are defined, which is required to implement more complex topologies. As the pipelines execute, their activity is monitored and logged for later analysis. Additional tools can be used to generate alerts based on events during data processing.

Only a configured pipeline version can process data on HERE Workspace. The following table describes operational commands that can be directed to a pipeline version:

| Command | Description |
|---|---|
| Activate | Starts data processing on a deployed pipeline version. |
| Delete | Removes a deployed pipeline version. |
| Pause | Freezes pipeline version operation until a resume command is issued. |
| Resume | Restarts a paused pipeline version from the point where execution was paused. |
| Cancel | Stops an executing pipeline version from processing any further data. |
| Upgrade | Replaces a pipeline version with another pipeline version. |
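The commands in the table suggest a simple lifecycle, which can be sketched as a state machine. The state names (`READY`, `RUNNING`, `PAUSED`, `DELETED`) and the exact set of allowed transitions below are assumptions inferred from the command descriptions, not the documented behavior of the pipeline service. Upgrade is left out because it involves two pipeline versions.

```java
import java.util.EnumMap;
import java.util.Map;

/** Hypothetical lifecycle model for a single pipeline version. */
final class PipelineVersionLifecycle {
    enum State { READY, RUNNING, PAUSED, DELETED }
    enum Command { ACTIVATE, PAUSE, RESUME, CANCEL, DELETE }

    private static final Map<State, Map<Command, State>> TRANSITIONS =
            new EnumMap<State, Map<Command, State>>(State.class);

    static {
        allow(State.READY, Command.ACTIVATE, State.RUNNING);  // start processing
        allow(State.RUNNING, Command.PAUSE, State.PAUSED);    // freeze until resumed
        allow(State.PAUSED, Command.RESUME, State.RUNNING);   // continue from pause point
        allow(State.RUNNING, Command.CANCEL, State.READY);    // assumed: back to idle
        allow(State.READY, Command.DELETE, State.DELETED);    // assumed: only idle versions
    }

    private static void allow(State from, Command command, State to) {
        TRANSITIONS
                .computeIfAbsent(from, k -> new EnumMap<Command, State>(Command.class))
                .put(command, to);
    }

    /** Returns the next state, or throws if the command is not valid in the current state. */
    static State apply(State current, Command command) {
        Map<Command, State> allowed = TRANSITIONS.get(current);
        State next = allowed == null ? null : allowed.get(command);
        if (next == null) {
            throw new IllegalStateException(command + " is not valid in state " + current);
        }
        return next;
    }

    public static void main(String[] args) {
        State state = State.READY;
        for (Command c : new Command[] {Command.ACTIVATE, Command.PAUSE, Command.RESUME}) {
            state = apply(state, c);
            System.out.println(c + " -> " + state);
        }
    }
}
```

Rejecting invalid commands with an exception, rather than ignoring them, makes mistakes such as resuming a version that was never paused visible to the caller.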

For Developers

If you are a developer and you want to start using HERE Workspace, note the following:

- HERE Workspace is designed to build distributed processing pipelines for location-related data. Your data is stored in catalogs. Processing is done in pipelines, which are applications written in Java or Scala and run on an Apache Spark or Apache Flink framework.

- HERE Workspace abstracts away the management, provisioning, scaling, configuration, operation, and maintenance of server-side components, and lets you focus on the logic of the application and the data required to develop a use case. HERE Workspace is aimed mainly at developers doing data processing, compilation, and visualization, but also allows data analysts to do ad-hoc development.